
How the Online Safety Act Is Changing Age Assurance in the UK

June 25, 2025

From July 2025, online services in the United Kingdom hosting pornographic or harmful content must have “highly effective” age verification systems in place to stop children from accessing this material. This is part of the UK’s Online Safety Act, enforced by Ofcom.

Services that don’t follow the rules face fines of up to £18 million or 10% of global turnover, whichever is higher.
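To make the "whichever is higher" rule concrete, here is a minimal sketch of the arithmetic. The function name and the sample turnover figures are illustrative assumptions, not taken from Ofcom's guidance, and the sketch ignores how turnover is formally defined and measured.

```python
# Illustrative sketch: the maximum fine is the greater of a fixed amount
# and a percentage of global turnover, as described above.
FIXED_CAP_GBP = 18_000_000   # £18 million
TURNOVER_PERCENT = 10        # 10% of global turnover


def max_fine_gbp(global_turnover_gbp: float) -> float:
    """Return the upper bound on a fine for the given global turnover."""
    if global_turnover_gbp < 0:
        raise ValueError("turnover must be non-negative")
    percentage_based = global_turnover_gbp * TURNOVER_PERCENT / 100
    return max(FIXED_CAP_GBP, percentage_based)


if __name__ == "__main__":
    # Hypothetical turnover figures, chosen only to exercise both branches.
    for turnover in (50_000_000, 500_000_000):
        fine = max_fine_gbp(turnover)
        print(f"Turnover £{turnover:,}: maximum fine £{fine:,.0f}")
```

Under this rule, the percentage-based figure only overtakes the fixed £18 million once global turnover exceeds £180 million, so smaller services are still exposed to the full fixed amount.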

What Is the Online Safety Act?

Passed in October 2023, the Online Safety Act is a UK law making online platforms responsible for reducing harm to users, especially children.

Ofcom, the UK’s communications regulator, is responsible for enforcing the Act and is introducing the obligations in phases:

1. Illegal Content Duties: Since March 2025, service providers must prevent and remove illegal content such as terrorism, child abuse material, and fraud, following the measures set out in the illegal content Codes of Practice.

2. Content Harmful to Children Duties: Service providers must block children from accessing harmful but legal content, including pornography, self-harm or suicide promotion, and eating disorder content.

This is the main area affecting adult sites and where Highly Effective Age Assurance (HEAA) comes in. Ofcom requires age checks for:

- Part 5 services (sites hosting their own adult content) — compliance deadline was 17 January 2025

- Part 3 services (user-generated adult content) — HEAA must be in place by 25 July 2025

All services likely to be accessed by children must also complete a Children’s Risk Assessment and take proportionate steps to prevent harm. The Act uses a risk-based approach. It recognises that platforms differ in size, resources, and the level of risk they pose.

3. Categorised Services Duties: Certain large or high-risk services will face additional obligations, including publishing transparency reports on how they assess online safety risks and what they’re doing about them, and providing clear tools for users, especially children and parents, to report issues or harmful content.

Who Is Affected by the Online Safety Act?

The rules apply to any online service accessible from the UK that hosts pornographic or similarly harmful content, allows users to upload such content or is likely to be accessed by children.

This includes both UK-based and international services.

Ofcom estimates that over 100,000 online services may be affected by the rules. Services likely to fall under the Act include:

- Gaming platforms

- Video streaming sites

- Dating apps and websites

- E-commerce sites with user interaction

- Social media platforms

- Adult content websites

What Counts as "Highly Effective" Age Assurance?

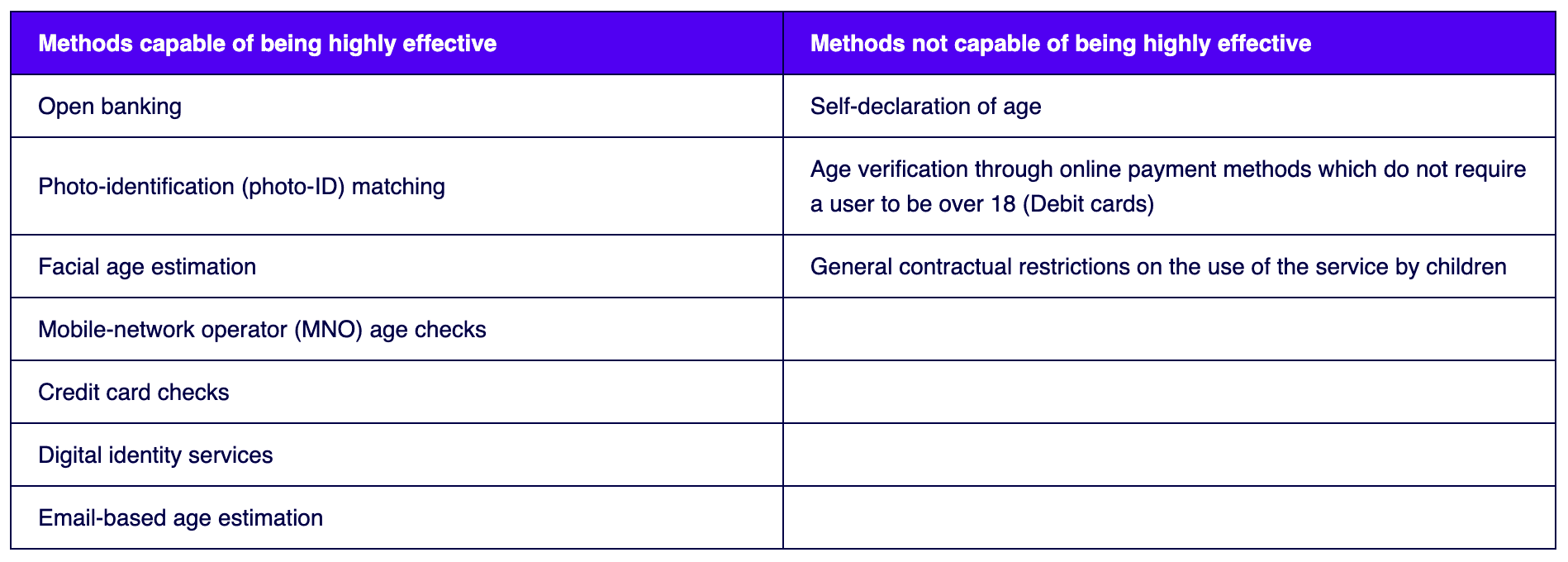

Ofcom has made it clear: not all age verification methods are acceptable. To be considered highly effective, an age assurance method must greatly reduce the risk of under-18s accessing adult content.

Methods not considered “highly effective”:

- Self-declaration (e.g., ticking a box saying “I’m over 18”)

- Credit/debit card payments without identity checks

- Only placing age restrictions in T&Cs without technical enforcement

- Email or SMS verification with no proof of age

These methods are considered too weak to be compliant.

What Can Be “Highly Effective Age Assurance”?

Ofcom recognises several methods that can meet the standard for highly effective age assurance, but only if they are implemented properly and meet all four key criteria:

- Technically accurate – It must correctly estimate or verify a user’s age with a high degree of accuracy.

- Robust – It must be secure and resistant to attempts to bypass it.

- Reliable – It should perform consistently across different users and conditions.

- Fair – It must not discriminate and should work equally well for all users.

However, effectiveness alone isn’t enough.

Some methods—like uploading ID documents or credit card details—may meet the technical requirements but come with significant privacy and usability drawbacks. They can discourage users and increase the risk of data exposure.

To ensure compliance without compromising user trust or experience, platforms should aim for solutions that are both effective and privacy-preserving, and this is where Gataca comes in.

How Gataca Can Help

Gataca provides two age assurance solutions that meet Ofcom’s “highly effective” standard while protecting users’ privacy and giving them the best possible experience:

Facial Age Estimation – Users simply take a selfie on their phone. Our AI estimates their age, confirms they’re a real person through liveness detection, and grants access, with no ID uploads, no stored images, and no friction.

Gataca App (ID Wallet) – Users complete a one-time identity check and receive a proof of age credential stored securely in their digital wallet. They can then share this with one click to access your site and reuse it anywhere digital ID wallets are accepted.

Your platform receives only a binary result—access granted or denied. No personal information like age, birthdate, or identity is shared. This not only ensures full compliance with the Online Safety Act but also removes the burden of handling or storing sensitive data, keeping users anonymous, secure, and in control.
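To illustrate what consuming a binary result could look like on the platform side, here is a minimal sketch. The `AgeCheckResult` type, its fields, and the `can_view_restricted_content` function are hypothetical names invented for this example; they do not represent Gataca's actual API or response format.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    GRANTED = "granted"
    DENIED = "denied"


@dataclass(frozen=True)
class AgeCheckResult:
    """Hypothetical payload: a session reference and a yes/no decision only.

    Note what is absent: no age, no birthdate, no name, no document data.
    """
    session_id: str
    decision: Decision


def can_view_restricted_content(result: AgeCheckResult) -> bool:
    """Allow access only on an explicit GRANTED decision."""
    return result.decision is Decision.GRANTED


if __name__ == "__main__":
    # Made-up session identifiers, used only to show both outcomes.
    for result in (
        AgeCheckResult("session-a", Decision.GRANTED),
        AgeCheckResult("session-b", Decision.DENIED),
    ):
        allowed = can_view_restricted_content(result)
        print(f"{result.session_id}: {'allow' if allowed else 'block'}")
```

Because the platform only ever sees the decision, there is no age or identity field for it to log, store, or leak.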

Final Thoughts

The Online Safety Act has raised the bar for age verification across all online platforms, especially adult content sites. The deadline is approaching fast—25 July 2025 for most platforms—and non-compliance is not an option.

If you haven’t already, begin assessing your current age verification methods and switch to a system that meets Ofcom’s expectations. Doing so protects your users and your business, and ensures you’re operating within UK law.

Esther Saurí

Digital Marketing Specialist